Make work easier, faster, and more enjoyable. Put your internal documentation at the fingertips of your employees.

Find What Matters, When It Matters with AI Enterprise Search Solutions

Connecting to context with custom AI enterprise search solutions

Intelligent document retrieval for real-world impact.

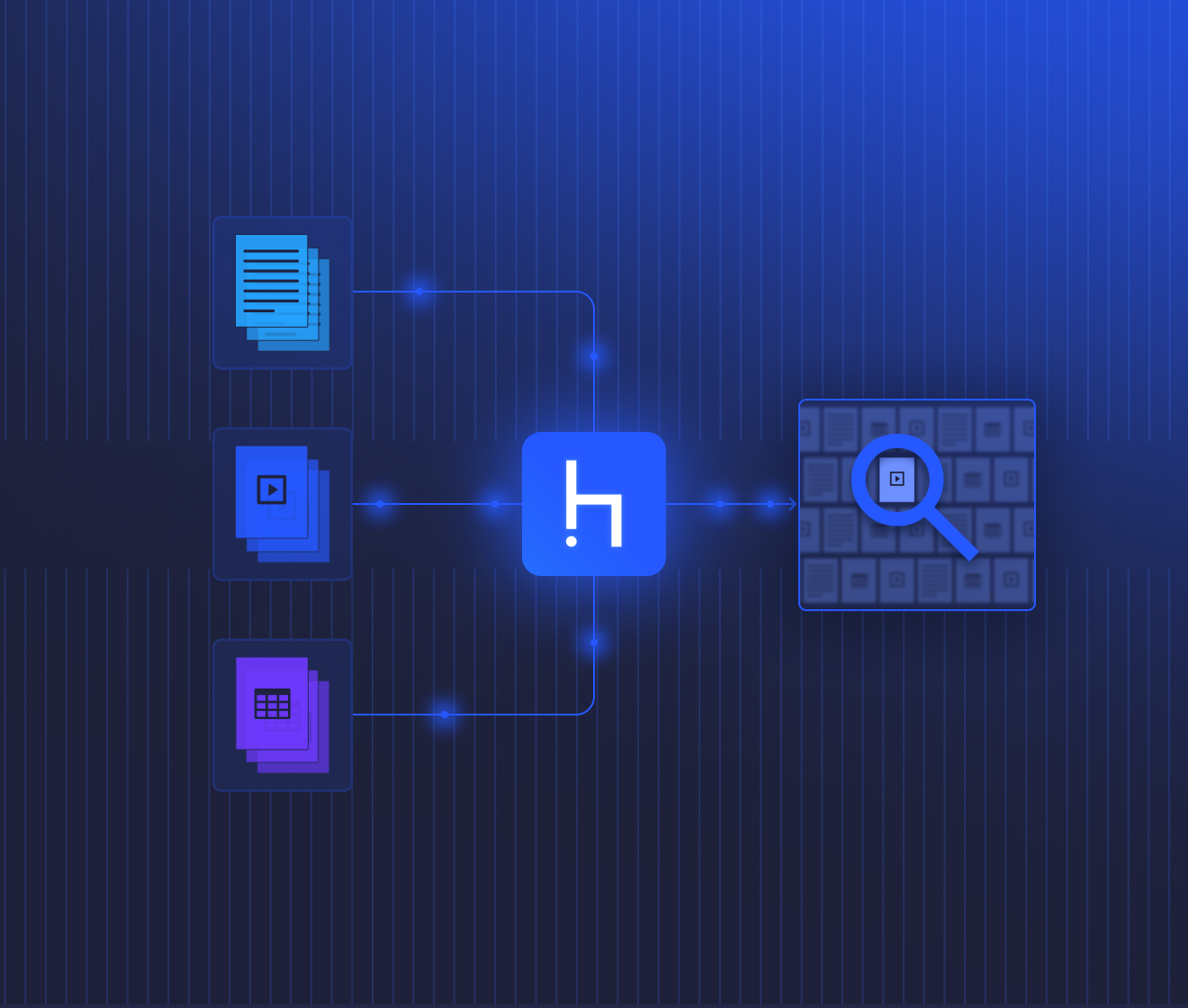

Find internal content instantly

Give users superior search capabilities

Create search systems that will help your customers find the information they need – through intuitive, natural language interfaces.

Make connections among datapoints

Suggest related content, or aggregate content for easier consumption.

Adapt to changing user needs

Enhance your search capabilities with metadata, feedback, and customization.

AI everywhere

Build increasingly powerful knowledge management systems on top of your document search.

SCALABLE, SAFE AND SECURE

FAQ

What is semantic search?

Semantic search uses AI to understand the meaning behind search queries and retrieve contextually relevant results from unstructured data such as text, images, and audio/video files. It can be combined with keyword-based methods like BM25 in a hybrid setup to deliver more accurate and meaningful search experiences. With deepset, you can build and customize advanced semantic search pipelines to meet your business needs.
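As a rough illustration of the hybrid setup described above, the sketch below merges a keyword ranking and a semantic ranking with reciprocal rank fusion (RRF). RRF is one common fusion technique and is used here as an assumption; it is not necessarily how deepset combines results. The document IDs, the two input rankings, and the constant `k=60` are all hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into a single ranking.

    Each document earns 1 / (k + rank) from every list it appears in,
    so documents ranked highly by more than one method rise to the top.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical outputs of a keyword (e.g. BM25) and a semantic retriever.
keyword_ranking = ["doc_a", "doc_b", "doc_c"]
semantic_ranking = ["doc_c", "doc_a", "doc_d"]

fused = reciprocal_rank_fusion([keyword_ranking, semantic_ranking])
print(fused)
```

Documents that appear in both lists (`doc_a`, `doc_c`) end up ahead of those found by only one method, which is the basic idea behind blending keyword and semantic results.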

How does semantic search improve user experiences?

Semantic search improves the employee and customer experience by delivering accurate, contextual results that match user intent. Hybrid search blends keyword and semantic methods to improve search accuracy and return relevant results while minimizing noise. This makes it valuable for applications such as content and commerce sites, knowledge management, customer support, research, and automation. The more advanced of these AI applications often use semantic search under the hood to find the context they need.

What are the use cases for semantic search?

Semantic search is ideal for enterprise search, customer support knowledge bases, legal document retrieval, product discovery, and personalized content or commerce recommendations. Its hybrid retrieval capabilities allow it to handle complex queries and diverse data types, making it indispensable in industries such as retail and consumer products, media and publishing, finance, healthcare, legal, and technology.

How can I implement semantic search in my organization?

Implementing semantic search means integrating AI-powered search into your data sources, workflows, and customer experiences. With pre-built templates for hybrid or semantics-only search in multiple languages, deepset enables you to jump-start your search pipelines and then optimize them for your industry and business needs, with scalability and accuracy in mind.

How can I customize semantic search for my business?

Customizing semantic search involves configuring components such as retrievers, rankers, and query processors to match your unique data and goals. With deepset's modular framework and hybrid retrieval capabilities, you can improve search accuracy, relevance, and scalability to deliver solutions that meet your end-user and business objectives.
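To make the idea of swappable components more concrete, here is a minimal, library-free sketch of a retriever-then-ranker pipeline. It does not use deepset's actual API; `Document`, `SearchPipeline`, `keyword_retriever`, and `overlap_ranker` are hypothetical names, and the term-overlap scoring stands in for whatever real retriever and ranker you would configure.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Document:
    doc_id: str
    text: str


# A component is any callable taking (query, documents) and returning documents.
Component = Callable[[str, List[Document]], List[Document]]


def _overlap(query: str, doc: Document) -> int:
    """Count distinct query terms that also occur in the document."""
    return len(set(query.lower().split()) & set(doc.text.lower().split()))


def keyword_retriever(query: str, docs: List[Document]) -> List[Document]:
    """Hypothetical retriever: keep documents sharing at least one query term."""
    return [d for d in docs if _overlap(query, d) > 0]


def overlap_ranker(query: str, docs: List[Document]) -> List[Document]:
    """Hypothetical ranker: order candidates by term overlap, then by ID."""
    return sorted(docs, key=lambda d: (-_overlap(query, d), d.doc_id))


@dataclass
class SearchPipeline:
    retriever: Component
    ranker: Component
    top_k: int = 3

    def run(self, query: str, docs: List[Document]) -> List[Document]:
        candidates = self.retriever(query, docs)
        return self.ranker(query, candidates)[: self.top_k]


corpus = [
    Document("1", "reset your password in settings"),
    Document("2", "password policy for contractors"),
    Document("3", "holiday calendar"),
]
pipeline = SearchPipeline(retriever=keyword_retriever, ranker=overlap_ranker, top_k=2)
results = [d.doc_id for d in pipeline.run("password reset", corpus)]
print(results)
```

Because each stage is just a callable with the same signature, replacing the keyword retriever with an embedding-based one, or adding a query-processing step, would not require changes to the rest of the pipeline.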