TL;DR

- MANZ Genjus KI is an AI-powered legal copilot, built with deepset, for legal professionals who handle complex legal research.

- Legal professionals previously spent hours searching hundreds or even thousands of documents to build a complete view of a case.

- MANZ partnered with deepset to enhance Genjus KI with Fokus, a legal AI agent feature that automates research workflows.

- Fokus delivers better-quality answers in minutes by routing queries to internal databases and web sources and synthesizing the findings into grounded, cited responses.

Key Metrics:

- 6 weeks to exceed annual revenue targets

- 63 million legal documents

- 77% boost in search recall

- 20% accuracy improvement over traditional search tools

Lawyers, judges, and other legal professionals must build a well-rounded picture of a legal question by looking at all relevant documents. Depending on the complexity of the question, this can require searching hundreds or even thousands of documents – a considerable investment in time and resources.

MANZ, a legal publishing house based in Austria, used deepset to build an AI-powered legal system tailored to its industry domain, data, and compliance requirements.

MANZ began with text similarity and retrieval-augmented generation (RAG) use cases to modernize legal search, then extended the system with an agent-based architecture that accelerates legal research by iteratively searching and evaluating content across diverse data sources to produce better-quality answers.

The Challenge: Information Management

MANZ’s online legal database, RDB Rechtsdatenbank, is a paid service that supports law firms in their legal research. It holds over 3.5 million documents, ranging from legal rulings and contractual clauses to other published content, and is updated daily.

The basic search function in RDB lets users input a query and returns all documents from the database that match the search terms.

However, as Alexander Feldinger, Product Manager at MANZ, explained: “Using one document doesn’t answer your question. It’s important to look at all the relevant documents; you don’t want to miss anything because that could have a negative effect on your work.”

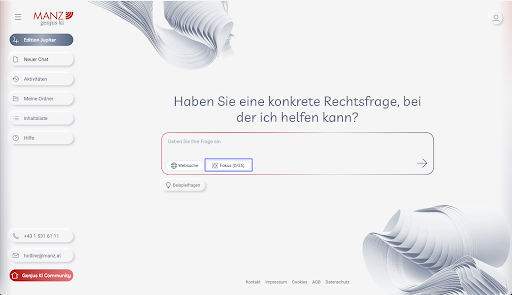

The Solution: MANZ Genjus KI

MANZ’s product and engineering teams used deepset to build MANZ Genjus KI, an AI-powered legal copilot that can plan, adapt, and safely accelerate legal research. The system conducts searches, synthesizes answers, and generates summaries. After a beta test with more than 4,000 legal experts, the system launched and now shortens research time for thousands of professionals.

MANZ built a sophisticated AI pipeline using the Haystack Enterprise Platform, which is built on Haystack, our open-source framework. Key components of the solution include rankers, extractors, summarization, and source verification. This modular architecture delivers greater accuracy while maintaining transparency.

The initial solution focused on two main use cases:

- Text Similarity: Uses embeddings and a vector database to handle nuanced legal inquiries, support iterative discussions, and maintain contextual understanding across sessions. This approach achieved a 77% boost in search recall, helping users find the right information faster.

- RAG Chatbot: A retrieval-augmented generation chatbot that answers complex legal questions and supports iterative discussions, drawing on historical queries and pre-processed proprietary and open-source data.
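To make the text-similarity idea concrete, here is a minimal sketch of ranking documents by cosine similarity between embedding vectors. It is an illustration only, not MANZ’s implementation: the three-dimensional vectors, the document IDs, and the `top_k` helper are hypothetical stand-ins. A production system would get its vectors from a trained (for example, legal-domain) embedding model and store them in a vector database rather than an in-memory dict.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: List[float], doc_vecs: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Score every document against the query and return the k most similar."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy "embeddings"; real models produce hundreds of dimensions.
docs = {
    "ruling_tenancy": [0.9, 0.1, 0.0],
    "clause_lease_termination": [0.6, 0.5, 0.2],
    "commentary_tax_law": [0.0, 0.2, 0.9],
}
query = [0.85, 0.2, 0.05]

if __name__ == "__main__":
    for doc_id, score in top_k(query, docs):
        print(f"{doc_id}: {score:.3f}")
```

Cosine similarity compares direction rather than magnitude, which is why it is a common default for comparing text embeddings; a vector database performs the same ranking at scale using approximate nearest-neighbor search.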

Although RAG was already delivering value, MANZ’s analysis of interactions on the platform revealed an opportunity to streamline the experience further. A pattern kept repeating: users often asked several follow-up questions to their original question to build a full view of a case. This insight led MANZ to explore agentic capabilities.

Fokus: A Legal AI Agent within Genjus KI

MANZ and deepset jointly developed “Fokus”, a legal AI agent that builds on the text similarity and RAG foundation to deliver higher-quality answers. Fokus splits up detailed questions, routes queries to internal legal databases or web sources, and synthesizes the findings into grounded, cited responses. It automates research steps that previously required manual refinement, cutting research time from hours to minutes.
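The decompose-then-route step can be sketched roughly as follows. This is a deliberately simple, hypothetical illustration, not how Fokus actually works: it assumes sub-questions are separated by semicolons and routes each one with a hand-written keyword list (`INTERNAL_HINTS`), whereas an agent like the one described would typically use a language model to split the question and choose a source.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical keyword list: terms suggesting the answer lives in the internal legal database.
INTERNAL_HINTS = ("ruling", "statute", "§", "commentary", "court", "clause")


@dataclass
class RoutedQuery:
    text: str
    source: str  # "internal_db" or "web"


def decompose(question: str) -> List[str]:
    """Naively split a compound question into sub-questions on ';' separators."""
    parts = [part.strip() for part in question.split(";")]
    return [part for part in parts if part]


def route(sub_question: str) -> RoutedQuery:
    """Send legal-source questions to the internal database, everything else to the web."""
    lowered = sub_question.lower()
    if any(hint in lowered for hint in INTERNAL_HINTS):
        return RoutedQuery(sub_question, "internal_db")
    return RoutedQuery(sub_question, "web")


def plan(question: str) -> List[RoutedQuery]:
    """Decompose the question and attach a target source to each sub-question."""
    return [route(sub) for sub in decompose(question)]


if __name__ == "__main__":
    question = ("Which Supreme Court rulings cover rent reductions for defects; "
                "what news articles discuss the latest tenancy reform")
    for step in plan(question):
        print(step.source, "->", step.text)
```

In a full agent, each routed sub-query would be executed against its source, and the results would be evaluated and merged into a single cited answer; the sketch stops at the planning step.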

MANZ's agentic architecture enhances accuracy through two key innovations. First, it ensures retrieved legal references are still valid. For example, when the system detects outdated legal standards, it automatically retrieves the current law on the same topic. Second, it leverages MANZ’s extensive legal metadata to map relationships between precedents, statutes, and commentary, ensuring practitioners understand how rulings connect rather than viewing them in isolation.
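The two ideas in the paragraph above, replacing an outdated reference with the current law and surfacing how documents relate, can be illustrated with a small metadata lookup. This sketch is hypothetical: the `SUPERSEDED_BY` and `CITED_BY` tables and the document IDs are invented for illustration, and MANZ’s actual metadata model is not described here. The idea is to follow "superseded by" links until reaching a reference that is still in force, then attach the documents linked to it.

```python
from typing import Dict, List

# Hypothetical metadata: which provision replaced which, and which documents cite which.
SUPERSEDED_BY: Dict[str, str] = {
    "statute_v1": "statute_v2",
    "statute_v2": "statute_v3",
}
CITED_BY: Dict[str, List[str]] = {
    "statute_v3": ["ruling_A", "commentary_B"],
}


def resolve_current(ref: str, superseded_by: Dict[str, str] = SUPERSEDED_BY) -> str:
    """Follow 'superseded by' links until reaching a reference that is still in force."""
    seen = {ref}
    current = ref
    while current in superseded_by:
        current = superseded_by[current]
        if current in seen:  # guard against malformed, cyclic metadata
            raise ValueError(f"cycle in supersession chain at {current}")
        seen.add(current)
    return current


def with_context(ref: str) -> Dict[str, object]:
    """Return the current version of a reference plus the documents linked to it."""
    current = resolve_current(ref)
    return {
        "requested": ref,
        "current": current,
        "outdated": current != ref,
        "related": CITED_BY.get(current, []),
    }


if __name__ == "__main__":
    print(with_context("statute_v1"))
```

Keeping supersession and citation links as explicit metadata means the agent can flag an outdated source deterministically instead of relying on the language model to notice it.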

Customer Benefits

The initial pilot demonstrated 20% higher accuracy than traditional search tools. Following the launch, MANZ exceeded its annual revenue target within six weeks by selling hundreds of seats.

The solution addresses critical industry-specific requirements, including:

- Transparency and References: Provides direct access to full source documents, enabling teams to instantly validate AI-generated research and build confidence in the results.

- Contextual Continuity: The legal copilot maintains state across iterative discussions, preserving context in both the RAG and agentic pipelines. Practitioners can build on previous queries without starting over.

- Legal Nomenclature: The solution uses an embedding model fine-tuned for legal terminology, ensuring that the AI understands and accurately processes the specific language of legal documents. This specialization is essential for delivering precise and relevant results in legal research.

"With deepset, we have established a partnership characterized by mutual respect and professional equality. Their expertise, prompt responsiveness, and transparent communication regarding ideas, adjustments, and issues have proven to be invaluable." - Alexander Feldinger, Product Manager at MANZ

