Case study

Build Smart Conversational Agents with the Latest Chatbot and Question Answering Technology

19.10.21

Right now, there are more chatbots out there than ever, and you've probably seen them embedded in many of the websites that you visit. This widespread adoption is driven by a range of tools, but especially by Rasa, a leading open source conversational AI platform.

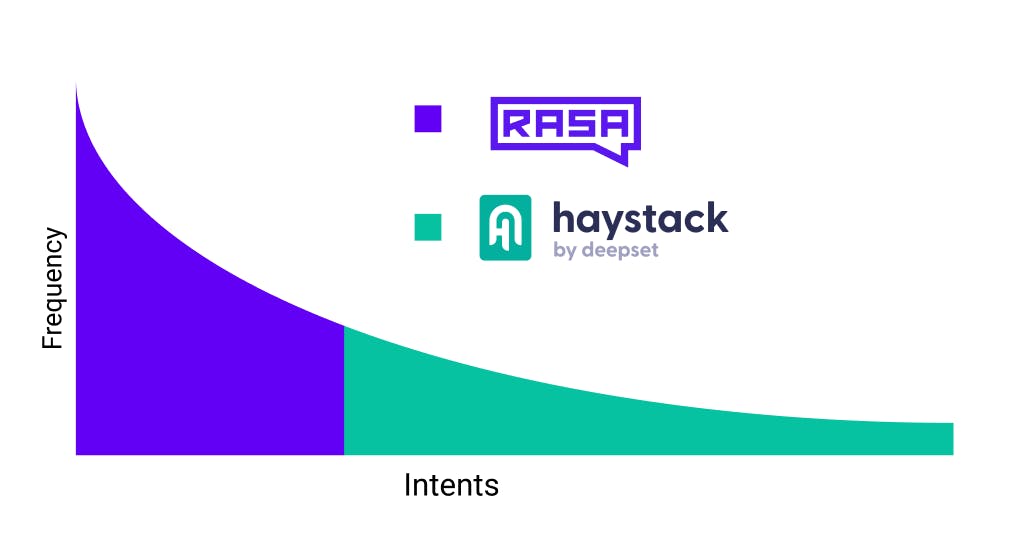

If you've ever built a chatbot yourself, you have probably run into a fundamental challenge: it's hard to anticipate every possible "intent" a future user might have. With the rise of question answering technology, however, there's a new option for tackling the long tail of informational intents that are not specific to a user (e.g. "How do I change my password?").

Today, we're going to show you how to combine the best that both worlds have to offer. By following this guide, you will learn how to build a chatbot that can identify either an information-seeking intent or a fallback intent, perform question answering over a large database of documents, and then compose a well-informed answer. Of course, we are going to keep it open source. That's why we'll be using Haystack and Rasa.

What is a Chatbot Powered by Question Answering?

Modern chatbot frameworks provide the tooling to turn free-form conversational text into structured data, allowing for context-dependent responses. Rasa chatbots achieve this by using fine-tunable machine learning models that assign an intent to each user utterance. For each intent, the bot can trigger a range of actions, depending on the situation or conversational flow, in order to compose a response. To give a simple example, a user utterance like "Where are you from?" might be labeled with an intent like location_question, which triggers the response utter_location, so the user sees something like "I'm based in the cloud" as the answer.

Conversations are often predictable and fall into certain common patterns but some conversations can take many twists and turns. Well-designed AI systems can cover many of the popular topics that come up but it is near impossible to define all the directions in which a conversation can go. Question answering is a powerful and flexible tool for handling those intents where relevant information needs to be smartly extracted from an existing set of documents.

Chatbots can already label a question like "What is the capital of Australia?" as an information-seeking request. However, a chatbot that is augmented with question answering capabilities can look through a Wikipedia corpus, make sense of relevant paragraphs and extract "Canberra" as an answer. There doesn't need to be a well-structured knowledge graph or database running in the background. Rather, using the power of NLP via a framework like Haystack, this chatbot is able to pull information directly out of unstructured text. The result is a more robust chatbot that is capable of handling the long tail of informational user requests.

Core Components

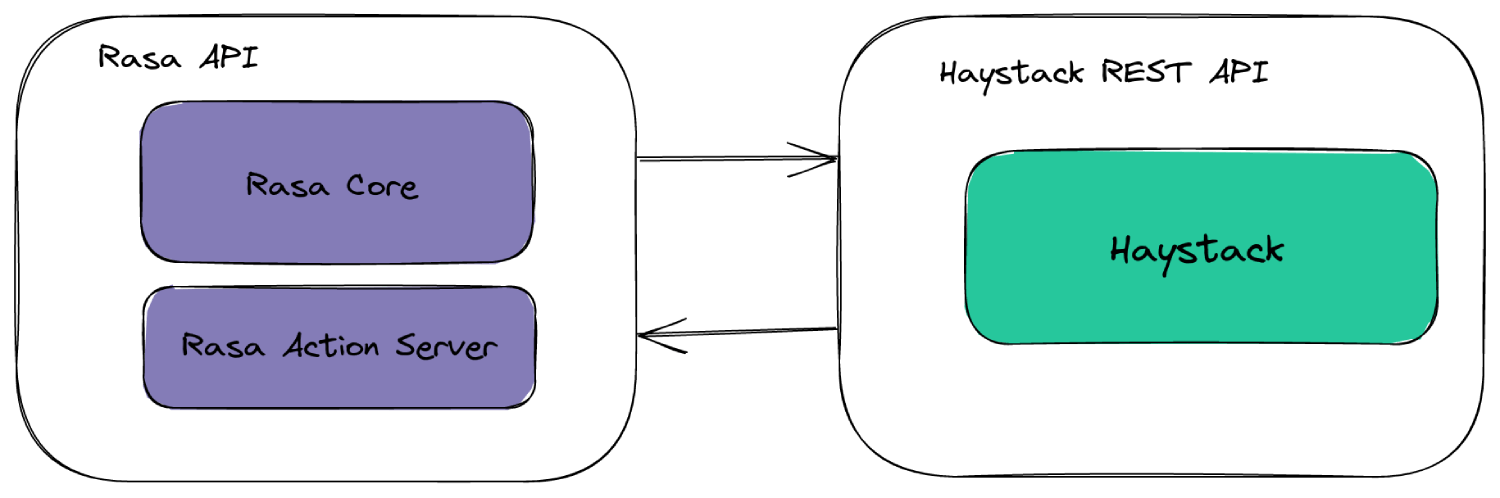

Rasa Core will handle everything to do with dialogue flow, classifying utterances by intent and triggering actions. It is a great platform for defining new intents and we are going to teach a chatbot to recognize information seeking utterances. When these utterances are detected, we will want the chatbot to trigger the appropriate action in Haystack. This is achieved via the Rasa Action Server which is a separate service from Rasa Core that allows for the chatbot to interact with external services.

The external service that handles the information intents of this system will be a Haystack question answering pipeline. This is composed of a database filled with relevant text documents, a retriever that quickly identifies the sections of your corpus that might be useful, and a reader which closely reads the text and highlights the specific words, phrases or sentences which answer the user query. Thanks to the REST API support in Haystack, it is easy to get Rasa to communicate with Haystack. Apart from this, we just need to define a pair of functions that will translate a user utterance into a Haystack query and the Haystack output into an appropriate chatbot response.
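The pair of translation functions mentioned above can be sketched in a few lines. This is a minimal sketch rather than code from Rasa or Haystack: the function names `utterance_to_query` and `response_to_reply` are hypothetical, and the `query`, `answers` and `answer` keys are the ones used by the action code later in this article. Other Haystack versions may shape the JSON differently.

```python
from typing import Any, Dict

NO_ANSWER = "No Answer Found!"


def utterance_to_query(utterance: str) -> Dict[str, str]:
    """Wrap the raw user text in the JSON body sent to Haystack."""
    return {"query": utterance}


def response_to_reply(response: Dict[str, Any]) -> str:
    """Pick the top-ranked answer string, or a fallback message."""
    answers = response.get("answers") or []
    if answers and answers[0].get("answer"):
        return answers[0]["answer"]
    return NO_ANSWER
```

Keeping the two directions as separate pure functions makes them easy to test without a running Rasa or Haystack service.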

Haystack can also be useful for fallback situations. In cases where the chatbot cannot easily classify the user's utterance into any of its predefined intents, Haystack can be called to help respond to the utterance which the chatbot would otherwise not know how to deal with.
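To make "cannot easily classify" more concrete, here is a simplified sketch of a confidence-based fallback decision. It is an illustration only, not Rasa's implementation: the function name `should_fall_back` is hypothetical, and the default values simply mirror the FallbackClassifier settings used later in this article. In a real project, Rasa makes this decision inside the NLU pipeline.

```python
from typing import Dict


def should_fall_back(
    confidences: Dict[str, float],
    threshold: float = 0.8,
    ambiguity_threshold: float = 0.1,
) -> bool:
    """Return True when no predefined intent is a clear enough match."""
    ranked = sorted(confidences.values(), reverse=True)
    if not ranked:
        return True
    # Case 1: even the best intent is below the confidence threshold.
    if ranked[0] < threshold:
        return True
    # Case 2: the two best intents are too close to tell apart.
    if len(ranked) > 1 and ranked[0] - ranked[1] < ambiguity_threshold:
        return True
    return False
```

With these defaults, a message scored `{"greet": 0.95, "goodbye": 0.03}` is handled by the greet intent, while one scored `{"greet": 0.5, "goodbye": 0.3}` falls back and can be handed to Haystack.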

The next section shows the code needed to build an augmented chatbot, but if you'd like to see one that we prepared earlier, please check out our reference implementation here.

Implementation

The first step is to set up a Haystack service that is accessible via REST API. If you're new to the concepts in Haystack, we have many resources to get you up to speed. A good starting point would be our tutorial or our introductory article — both will give you an understanding of what lies under the hood. Ultimately the configuration of this question answering system is up to you. It could be a light and efficient system that uses an Elasticsearch database with a faster reader model. Or it could be a Milvus database that uses a dense passage retriever and a large Transformer model as the reader. Both are possibilities in Haystack. Just make sure to deploy your pipeline with the REST API so that it can be called by Rasa. You can learn to do just this in this blog article.
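Before wiring in Rasa, it can help to confirm that the deployed service answers a query on its own. The snippet below is a minimal sketch using only the Python standard library. It assumes the service listens at `http://localhost:8000/query` (the default port mentioned in this article) and accepts a JSON body with a `query` key. The helper names `build_query_request` and `ask_haystack` are hypothetical.

```python
import json
import urllib.request

HAYSTACK_URL = "http://localhost:8000/query"  # assumed default address


def build_query_request(question: str, url: str = HAYSTACK_URL) -> urllib.request.Request:
    """Build a POST request carrying the question as a JSON body."""
    body = json.dumps({"query": question}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_haystack(question: str, url: str = HAYSTACK_URL, timeout: float = 30.0) -> dict:
    """Send the question and return the decoded JSON response."""
    request = build_query_request(question, url)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        return json.loads(reply.read().decode("utf-8"))


# With the service running, uncomment to print the raw JSON response:
# print(ask_haystack("What is the capital of Australia?"))
```

If the call raises a connection error, the REST API is most likely not running or is listening on a different address.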

On the Rasa side, you'll want to start by installing the package and initializing a new chatbot project. We will then need to make some changes to various configuration files to get our chatbot ready for Haystack. The result of these changes, once again, can be seen in our reference implementation.

To start, we will define a new intent by providing some example utterances. Here, we define the knowledge_question intent in the data/nlu.yml file.

    nlu:
    - intent: knowledge_question
      examples: |
        - Can you look up the capital of Norway?
        - What is the tallest building in the world?
        - Who won the 2018 Champions League?
        - Where is Faker from?
        - Can you tell me who the Prime Minister of Australia is?
        - Why do leaves turn yellow?
        - Do you know who stole Christmas?
        - When is Easter?

Next, you will want to define what action is taken when that intent is identified in the data/rules.yml file.

    - rule: Query Haystack anytime the user has a direct question for your document base
      steps:
      - intent: knowledge_question
      - action: call_haystack

Note that instead of defining the knowledge_question intent, you can also use the fallback intent to trigger Haystack. This way, you only query Haystack in situations where none of your defined intents is a match. You will need to add the FallbackClassifier to the pipeline in config.yml.

    pipeline:
    # Keep your existing pipeline components above this entry.
    - name: FallbackClassifier
      threshold: 0.8
      ambiguity_threshold: 0.1

You will also need to add the following to data/rules.yml.

    - rule: Query Haystack whenever they send a message with low NLU confidence
      steps:
      - intent: nlu_fallback
      - action: call_haystack

By default, the action server endpoint is commented out in endpoints.yml, so you will need to uncomment these lines to get it to work.

    action_endpoint:
      url: "http://localhost:5055/webhook"

Finally, we can define an action function that calls the Haystack API and handles the response in actions/actions.py. By default, the Haystack REST API is located on port 8000, hence the URL in the code below.

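    from typing import Any, Dict, List, Text

    import requests
    from rasa_sdk import Action, Tracker
    from rasa_sdk.executor import CollectingDispatcher


    class ActionHaystack(Action):

        def name(self) -> Text:
            # Must match the action name used in rules.yml and domain.yml.
            return "call_haystack"

        def run(self, dispatcher: CollectingDispatcher,
                tracker: Tracker,
                domain: Dict[Text, Any]) -> List[Dict[Text, Any]]:
            # Forward the user's latest message to the Haystack REST API.
            url = "http://localhost:8000/query"
            payload = {"query": str(tracker.latest_message["text"])}
            headers = {"Content-Type": "application/json"}
            response = requests.post(url, headers=headers, json=payload).json()

            # Reply with the top-ranked answer, if Haystack found one.
            if response["answers"]:
                answer = response["answers"][0]["answer"]
            else:
                answer = "No Answer Found!"

            dispatcher.utter_message(text=answer)
            return []

You will also want to declare this new action in the domain.yml file.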

actions:

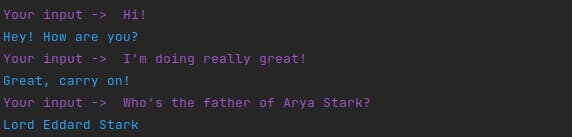

- call_haystackRunning the Chatbot

Whenever changes are made to your Rasa project, you will want to retrain your chatbot.

    $ rasa train

You will also need to start the Rasa action server.

    $ rasa run actions

To start interacting with the bot you have created, use the following command.

    $ rasa shell

Now you can start talking to the Haystack-enabled chatbot!

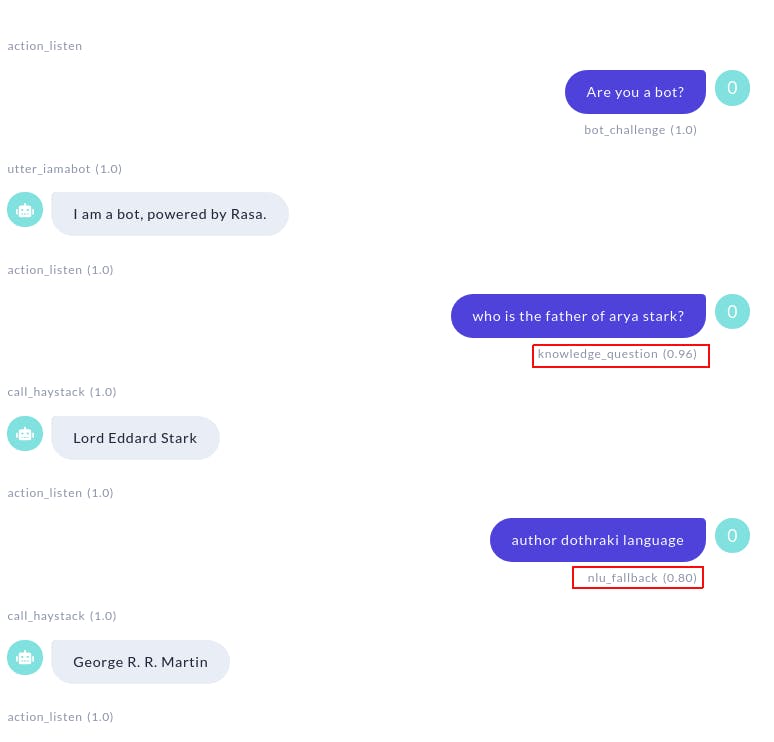

Alternatively, you can use Rasa X, which allows the chatbot to be run in a GUI. While interacting with your chatbot in this interface, you will also see the intent being assigned to each message as well as the action being taken.

Further Improvements

Congratulations if you made it this far! You now have a Rasa chatbot with the question answering power of Haystack! That said, there are still many directions you can go in to make this chatbot even better.

For example, you could create different intents for questions about different domains. You might want to route your finance questions to a Haystack pipeline that is geared towards finance, while questions about legal issues are passed on to a system that is loaded with, and trained on, legal data. This starts with giving more examples of the new intents and retraining the Rasa model so that it becomes good at differentiating between the topics. Each topic should then have its own action, each of which should call a different Haystack service.
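One way to keep the topic-specific actions small is to let each of them look up its endpoint in a shared table. The sketch below is illustrative only: the intent names `finance_question` and `legal_question`, the ports 8001 and 8002, and the helper `service_for_intent` are all hypothetical and would need to match your own Rasa training data and Haystack deployments.

```python
from typing import Dict

# Hypothetical intent names and service addresses, for illustration only.
HAYSTACK_SERVICES: Dict[str, str] = {
    "finance_question": "http://localhost:8001/query",
    "legal_question": "http://localhost:8002/query",
}
# General-purpose service used when no topic-specific one is registered.
DEFAULT_SERVICE = "http://localhost:8000/query"


def service_for_intent(intent_name: str) -> str:
    """Return the Haystack endpoint that should handle this intent."""
    return HAYSTACK_SERVICES.get(intent_name, DEFAULT_SERVICE)
```

Inside a custom action, the intent name of the latest message is available as `tracker.latest_message["intent"]["name"]`, so the action can pass that value to the lookup and post the query to the returned URL.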

Also, when we were defining the function that parses Haystack's output and composes a chatbot response, we were only making use of the answer string. In fact, Haystack returns a lot more useful information such as the identifier of the document from which the answer came, the context around the answer string and also the confidence with which it made this prediction. Presenting these extra fields to the user enables them to navigate to the answering document, understand why that specific answer is being returned and find more relevant information.
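A richer reply could be composed along the following lines. This is a sketch under assumptions: the field names `context`, `document_id` and `score` are guesses at what a single answer entry contains and vary between Haystack versions, so check the JSON your own service returns. The helper name `format_answer` is hypothetical.

```python
from typing import Any, Dict


def format_answer(answer: Dict[str, Any]) -> str:
    """Compose a reply that shows the answer plus its supporting evidence."""
    lines = [answer.get("answer") or "No Answer Found!"]
    # Each extra field is optional, so it is only shown when present.
    context = answer.get("context")
    if context:
        lines.append(f'Context: "{context}"')
    document_id = answer.get("document_id")
    if document_id:
        lines.append(f"Source document: {document_id}")
    score = answer.get("score")
    if isinstance(score, (int, float)):
        lines.append(f"Confidence: {score:.2f}")
    return "\n".join(lines)
```

The resulting string can be passed to `dispatcher.utter_message(text=...)` in place of the bare answer string.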

Conclusion

Chatbot integration is one of the most requested features in the Haystack repository. That is not at all surprising, considering how much both technologies enable humans to interact with data through a natural language interface. Now that it is here, we hope to see many more chatbots built on the powerful combination of Rasa and Haystack. We're looking forward to seeing what you'll build with it!