
Enhancing Intelligent Document Processing with LLMs in deepset Cloud

Gain actionable insights by extracting information from your data at scale

24.04.24

Document-intensive processes require skilled analysts to thoroughly evaluate the case at hand. This often involves reviewing detailed records that may contain hundreds of documents.

Examples include:

- Legal, financial, and corporate transaction due diligence.

- Compliance and regulatory reviews.

- Audit and assurance activities.

- Contract analysis and management.

Enter knowledge extraction with large language models (LLMs): our cutting-edge solution in deepset Cloud that radically changes the way large document sets are analyzed, while allowing users to review and share the results on the fly.

Intelligent document processing (IDP) is a collection of tools that automate and streamline time-consuming document analysis tasks. For an overview of how LLMs are revolutionizing IDP, check out our previous blog post, which includes real-world examples from our user base.

In this blog post, we'll dive a little deeper into the inner workings of IDP with LLMs in deepset Cloud.

A quick introduction to deepset Cloud

deepset Cloud is the LLM platform where cross-functional AI teams come together to build shippable products. It includes templates for the most common LLM setups, such as retrieval-augmented generation (RAG), as well as pre-built workflows for repetitive tasks, based on years of experience applying AI to real-world use cases.

Product teams can focus on building and improving their applications while the platform handles AI deployment and operation. It supports all phases of the AI product lifecycle, including experimentation, prototyping, evaluation, and observability.

Many of our customers have complex document sets that need to be processed consistently. LLMs are a natural fit for this task because they can extract structured information from unstructured data.

Asking questions to obtain knowledge

Most people are familiar with LLMs in the context of text generation, chat interfaces, and, increasingly, multimodal applications. All of these respond to novel, spontaneous user queries.

But in recurring document analysis processes, such as due diligence, analysts must manually extract the same types of information every time by sifting through large volumes of data. For example:

- Does the company have adequate assets to cover its current and projected liabilities?

- Are there any pending or potential legal issues?

- What intellectual property does the company own?

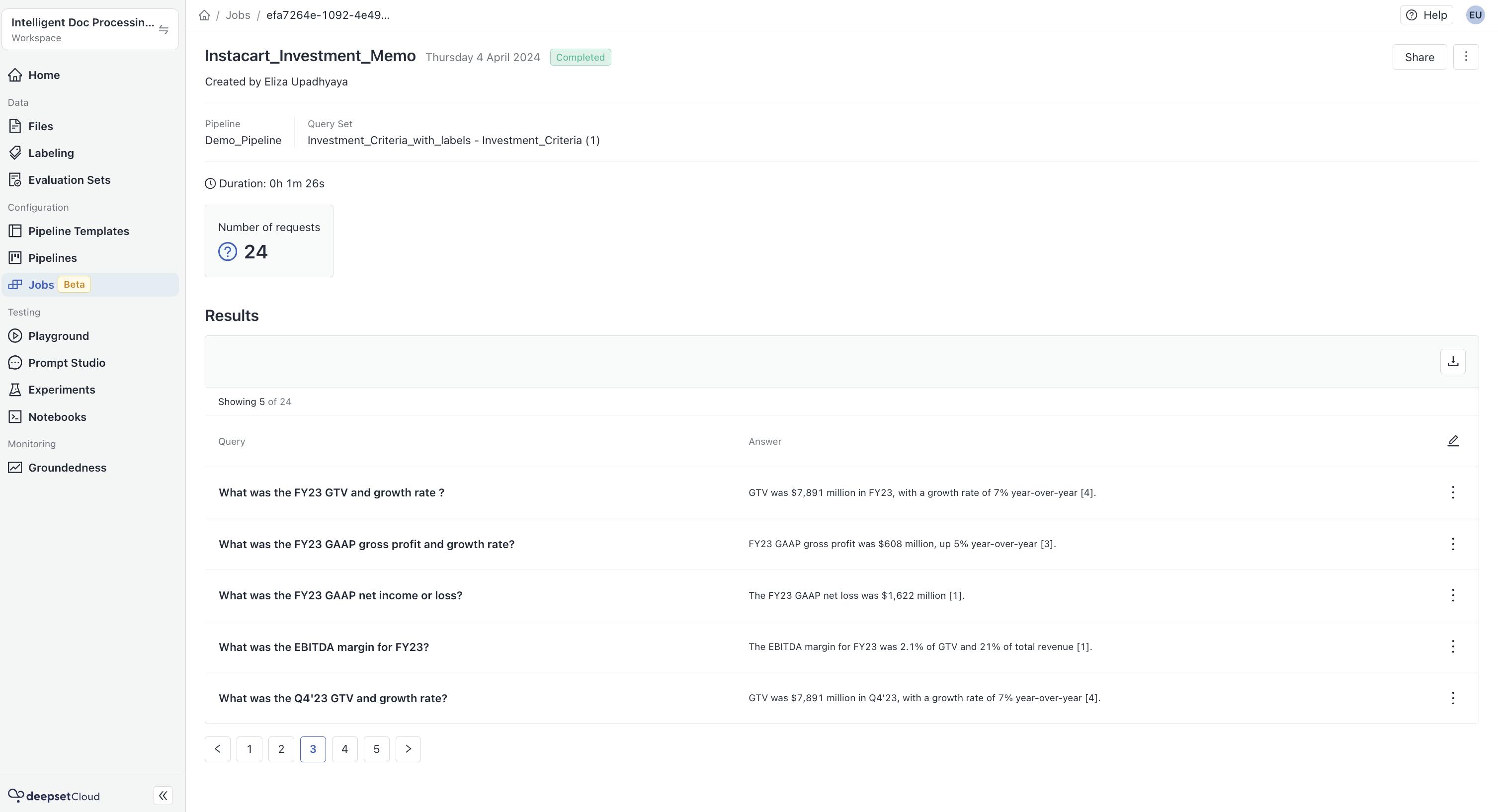

Knowledge extraction in deepset Cloud lets you define queries in advance, run an extraction job in the background, and obtain knowledge on the fly.
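To make the idea of a predefined query set concrete, here is a minimal plain-Python sketch. It is not the deepset Cloud API: `QUERY_SET`, `run_extraction_job`, and the `answer_fn` callable (a stand-in for whatever LLM pipeline you deploy) are hypothetical names chosen for illustration. The sketch runs the same questions against a document set concurrently and collects the answers by key.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical query set, defined once and reused for every new case.
QUERY_SET: dict[str, str] = {
    "liquidity": "Does the company have adequate assets to cover its current and projected liabilities?",
    "litigation": "Are there any pending or potential legal issues?",
    "ip": "What intellectual property does the company own?",
}


def run_extraction_job(
    documents: list[str],
    answer_fn: Callable[[str, list[str]], str],
    query_set: dict[str, str] = QUERY_SET,
) -> dict[str, str]:
    """Run every predefined query against the same documents; answer_fn is a hypothetical LLM call."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        # Submit all queries at once so they run concurrently.
        futures = {key: pool.submit(answer_fn, query, documents) for key, query in query_set.items()}
        # Block until each answer is ready and collect results under the query's key.
        return {key: future.result() for key, future in futures.items()}
```

Because the query set is data rather than code, analysts can add or rephrase questions without touching the job itself.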

How to set up a knowledge extraction job in deepset Cloud

deepset Cloud is both vendor-agnostic and modular. This means you can use LLM setups of varying complexity and with any model for your knowledge extraction job: whatever works best for your use case.

The platform's iterative refinement process allows your team to build the LLM pipeline, test the query set, and tweak the prompt before committing to a specific setup. deepset Cloud was built with the understanding that AI products require many development cycles. With features optimized for the product development lifecycle, AI teams can:

- Decide on the optimal LLM setup configuration.

- Optimize hyperparameters.

- Write the ideal prompt.

- Determine how to phrase queries for high-quality, robust results.

Prompting for success

Prompts are the de facto user interface for any LLM. Using prompts, AI practitioners guide LLMs to optimal output, both in format and content. deepset Cloud provides tested prompt templates that users can customize or use out of the box.

In the case of knowledge extraction for IDP, the prompt should guide the LLM to provide references for each assertion it makes, for easy fact-checking. In addition, the model should return short, factual information rather than full sentences, so that this data can then be used to populate a predefined memo template.
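As an illustration of these two requirements, the sketch below builds a prompt that asks for short answers with a citation after every claim, and parses those citations back out. The template wording and the helper names `build_extraction_prompt` and `cited_documents` are hypothetical; they are not the prompt templates shipped with deepset Cloud.

```python
import re

# Hypothetical extraction prompt: short factual phrases plus a citation for every claim.
PROMPT_TEMPLATE = """You are analyzing documents for a due diligence review.
Answer the question using only the documents below.
Reply with short, factual phrases, not full sentences.
After every claim, cite the supporting document number in square brackets, e.g. [2].
If the documents do not contain the answer, reply "Not found".

Documents:
{documents}

Question: {question}
Answer:"""


def build_extraction_prompt(question: str, documents: list[str]) -> str:
    """Number the documents so the model can cite them as [1], [2], ..."""
    numbered = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(documents, start=1))
    return PROMPT_TEMPLATE.format(documents=numbered, question=question)


def cited_documents(answer: str) -> list[int]:
    """Return the document numbers an answer cites, in order of first appearance."""
    seen: list[int] = []
    for match in re.findall(r"\[(\d+)\]", answer):
        number = int(match)
        if number not in seen:
            seen.append(number)
    return seen
```

Numbering the documents in the prompt keeps citations machine-readable, which is what makes the later fact-checking step practical.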

Unlock and share information instantly

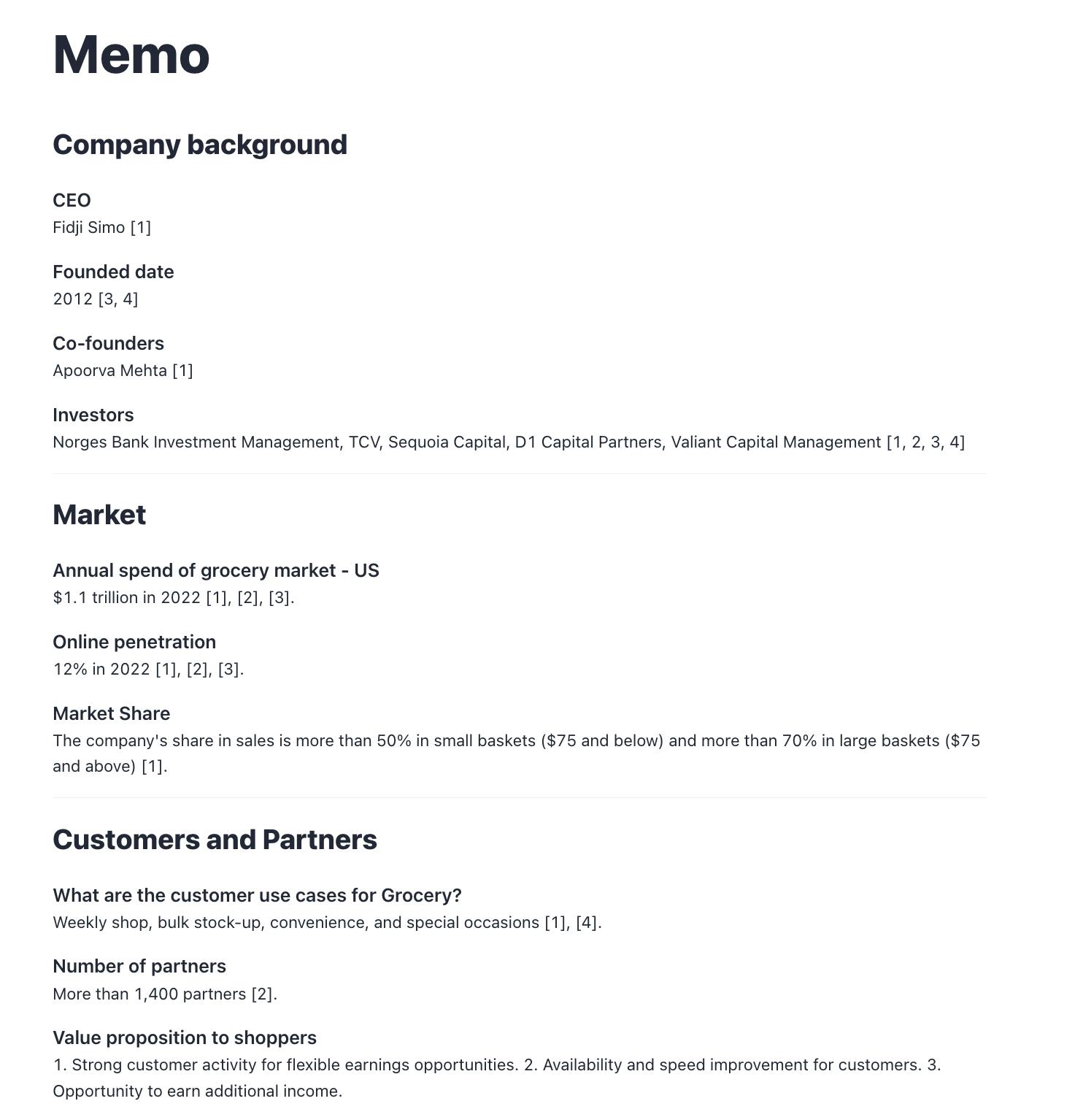

Based on the extracted information, deepset Cloud will generate a memo that contains all the relevant data from the underlying document set.

A human analyst can easily fact-check this information by verifying that it appears in the referenced documents. Once they're satisfied with the results, they can share a link to the memo with other stakeholders. In other words, the insights gained in deepset Cloud can be translated into action immediately.
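The memo step can be sketched in a few lines as well. This is a hypothetical illustration, not how deepset Cloud renders memos: `MEMO_TEMPLATE`, `needs_review`, and `build_memo` are made-up names, and the answers are assumed to cite documents as `[n]`. The code only flags answers with no citation or a citation to a nonexistent document; checking that a claim actually appears in the cited document remains the analyst's job.

```python
import re
from string import Template

# Hypothetical memo layout; each placeholder receives one extracted answer.
MEMO_TEMPLATE = Template(
    "Due diligence memo\n"
    "Liquidity: $liquidity\n"
    "Litigation: $litigation\n"
    "Intellectual property: $ip\n"
)


def needs_review(answer: str, num_documents: int) -> bool:
    """Flag answers that cite nothing or cite a document number outside 1..num_documents."""
    cited = [int(n) for n in re.findall(r"\[(\d+)\]", answer)]
    return not cited or any(not 1 <= n <= num_documents for n in cited)


def build_memo(answers: dict[str, str], num_documents: int) -> tuple[str, list[str]]:
    """Fill the memo template and list the fields an analyst should double-check first."""
    memo = MEMO_TEMPLATE.safe_substitute(answers)
    flagged = sorted(key for key, answer in answers.items() if needs_review(answer, num_documents))
    return memo, flagged
```

Using `safe_substitute` leaves any missing placeholder visible in the memo instead of raising an error, so a gap in the extraction stays easy to spot.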

IDP with LLMs: A new standard for knowledge management

LLMs finally put the intelligence in IDP: powered by these models, knowledge extraction with predefined query sets allows organizations to significantly streamline their document set analysis. This frees up subject matter experts to apply their critical thinking skills to the model results and review more cases in less time.

Far from being redundant, human expertise is still very much needed to verify and refine the model's results. However, the repetitive and tedious parts of the process are handled by the LLM.

If you're looking for ways to extract knowledge from your data using AI, and want a tested, scalable solution, we'd love to hear from you.