Parameter-Tweaking: Get Faster Answers from Your Haystack Pipeline

28.06.21

This article is the first in our series on optimizing your Haystack question answering system. We’ll link to the other articles here as they go online:

- Parameter-Tweaking

- Accelerate Your QA System with GPUs

- Picking The Right Reader Model

- Metadata Filtering

There are many ways you can optimize a parameter-rich system like a Haystack question answering pipeline. Specifically, the length of your documents and your setting for the top_k_retriever parameter can have an enormous impact on system performance. In this post, we’ll show you the most important tweaks to speed up your Haystack question answering system without sacrificing the quality of its results.

The Haystack Pipeline

To understand the impact of these parameters, let’s quickly look at the four stages of a Haystack question answering (QA) pipeline:

- Preprocessing: Here, we read in our document collection and convert it to a list of dictionaries. Additionally, we may use the PreProcessor class to modify our data.

- Document Store: We pick the database that best fits our use case, and feed our preprocessed documents to the database via a document store object.

- Retriever: Choosing between “sparse vs. dense” retrieval methods comprises a crucial architectural decision. In this step, we index our database by means of the retriever, thereby making our documents searchable. Sparse methods are faster at indexing, while dense ones are powered by transformer-based language models.

- Reader: In this step, we pick a model to perform question answering on the documents preselected by the retriever.

The reader and retriever are commonly chained together by use of pipelines. This allows the user to send only one command to ask a question, which then triggers actions from both the reader and retriever.

Both the preprocessing and retrieval steps offer opportunities for optimization, the most important of which we’ll show you in this article. Let’s start off with document length.

Increasing Pipeline Speed via Document Length Optimization

A document in the question answering context is simply a passage of text of arbitrary length. Adjusting that length to the optimal value can go a long way to improving your system’s speed. The trick is to split your knowledge base into documents that are both a) neither too short nor too long, and b) as informative as possible.

The PreProcessor class will help us segment our documents into passages of the optimal length. Let’s have a look at the parameters defined in its __init__ function:

class PreProcessor(BasePreProcessor):

def __init__(

self,

clean_whitespace: bool = True,

clean_header_footer: bool = False,

clean_empty_lines: bool = True,

split_by: str = “word”,

split_length: int = 1000,

split_overlap: int = 0,

split_respect_sentence_boundary: bool = True)We combine split_by and split_length to define our document length. split_by sets the unit for defining split length — e.g., “word,” “sentence,” or “passage” (defined as a paragraph). Since sentence and paragraph length may fluctuate widely, splitting by word is the best choice for most use cases. split_length then determines the number of words contained in a document.

However, splitting at the word level does not come without issue. A sentence may lose its context when split somewhere in the middle. To avoid any such unwanted behavior, you may turn on the parameter split_respect_sentence_boundary. When set to “True,” this parameter ensures that splitting only occurs at sentence boundaries. At the same time, it prevents longer sentences from being split up, even if they exceed the value defined for split_length.

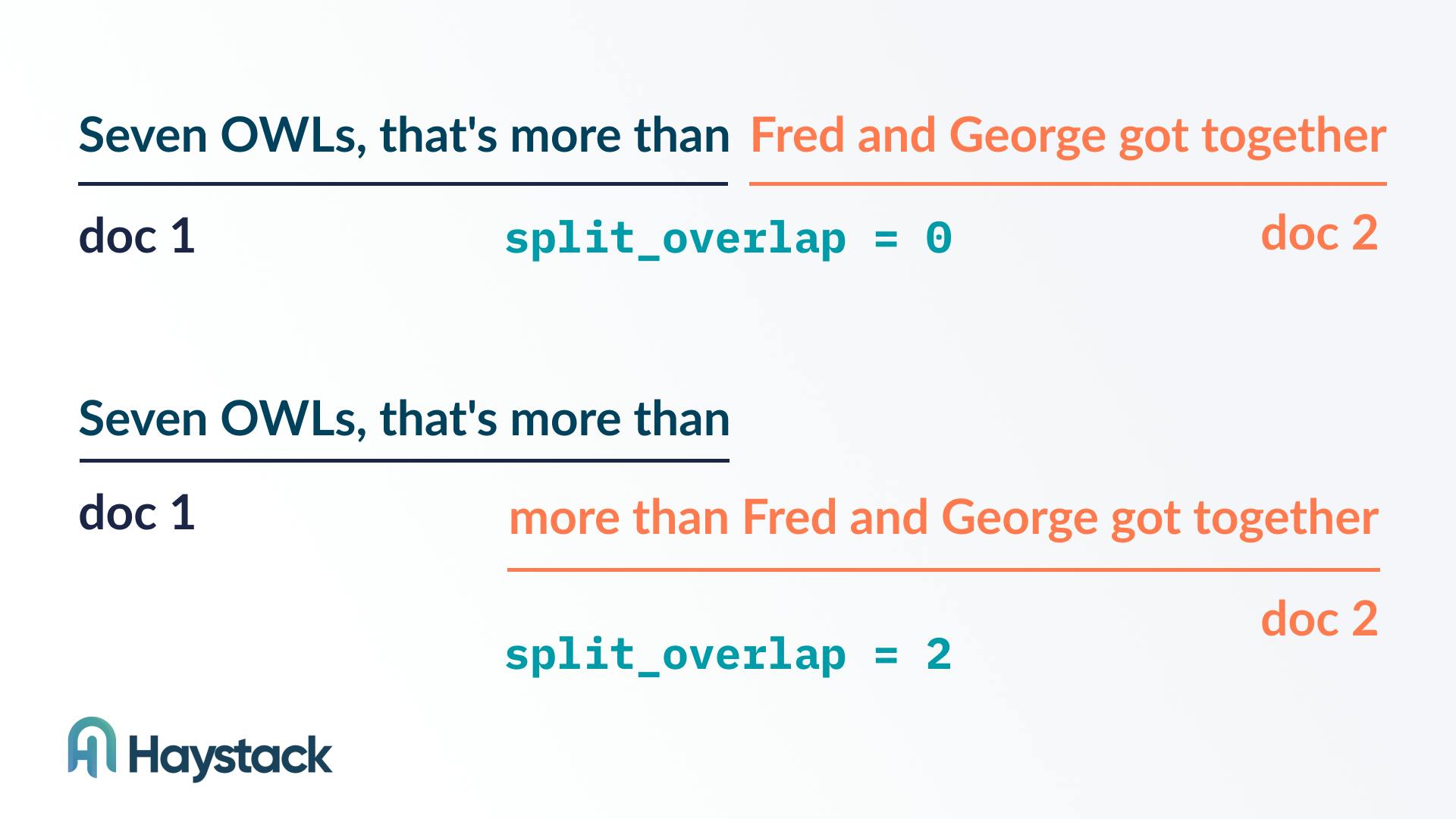

Another method for minimizing the risk of losing the syntactic context of a sentence after splitting is to define an overlap of a few words between documents (the “sliding window” method). By setting the split_overlap parameter to a positive integer, the words at the seam of a split are included in both resulting chunks. Keep in mind though that a high overlap will inflate your corpus as overlapping sections are featured twice. Here's how the split_overlap parameter works:

Document-splitting with dense methods

Dense retrieval methods like DPR (dense passage retrieval) use encoders both during indexing and querying. A Transformer-based model processes documents and encodes them as dense vectors of a fixed length (usually 768).

The dense passage retriever has a limit for the number of tokens it can process at once, which is set to 256 by default. The encoder will simply shorten any passages exceeding the limit. Thus, to ensure that all of our passage contents get encoded, we’ll need to avoid using overly long passages. We recommend 100 words per passage, which is the document length that the DPR model is trained on:

processor = PreProcessor(

split_by=’word’,

split_length=100,

split_respect_sentence_boundary=True)Document-splitting with sparse methods

Sparse retrieval methods do not use encoders and are therefore much faster than dense retrievers. These methods represent documents as sparse vectors (made up of mostly zeros) that consist of the weighted counts of their words.

Since sparse vectors are not enriched with the linguistic knowledge of deep language models, they have no understanding of semantic similarity between words. Therefore, when working with a sparse method, we rely entirely on the actual query keywords appearing in the texts. This calls for documents that are longer than the dense representations described above. But documents should not be too long — otherwise the rest of a document may wash out our passage of interest. We recommend a split_length of 500 words when working with a retriever-reader pipeline.

Shorter documents also ease the reader’s computational load, especially when using a sparse retrieval method. While results may vary between runs, our experiments show that doubling the document length from 500 to 1000 words slows down the query pipeline by about fifty percent. For instance, a query might take one second to run on the shorter documents and 1.5 seconds on the longer ones. This can make a big difference when you want to scale the number of queries to your system.

A variable with an even bigger impact on your system’s runtime is the number of documents that the reader receives from the retriever. We tweak it using the top_k_retriever parameter.

Get Faster Answers by Adjusting top_k_retriever

top_k_retriever gets its name from the variable “k” which is often used for integer hyperparameters in machine learning contexts. To understand why this parameter has such a high impact on our system’s performance, let’s briefly recap what we know about both the retriever and reader, as well how the two interact.

Retriever vs. Reader

The retriever and reader are both indispensable elements of a question answering pipeline. The retriever’s role is to sift through a large amount of documents by performing quick vector computations to find the best candidate documents for the reader. The reader then looks at only these candidates, passing them through a pre-trained transformer model and determining which answers best fit a query. If the retriever were a large-scale excavator, the reader would be a pickaxe.

Speed is a major point of comparison for the two components. Because the reader is so much slower than the retriever, the number of documents that the retriever ultimately selects as answer candidates can make a big difference. We adjust that number by way of the top_k_retriever parameter, either when running the pipeline or upon the retriever’s initialization (where it’s simply called top_k).

Working with top_k_retriever

If your system is too slow, try lowering top_k_retriever. In the below example, we’ll use a subset of the Harry Potter Wiki (from https://harrypotter.fandom.com under CC-BY-SA license). To get the best answer possible, we’ll set top_k_retriever to 100 documents. Let’s put everything together and ask our question:

query = “Who is Dobby’s owner?”

timed_run = timer(pipeline.run)

answer = timed_run(query=query, top_k_retriever=100, top_k_reader=1)

>>> Time elapsed: 5.86 seconds

short_answer(answer)

>>> Answer: The Malfoy family, with 84 percent probability.Our system gave a reasonable answer, and it only took seconds. But what if I told you that we could have obtained an equally good result in a fraction of the time? Let’s see what happens when we set top_k_retriever — the number of documents seen by the reader — from 100 to 10.

answer = timed_run(query=query, top_k_retriever=10, top_k_reader=1)

>>> Time elapsed: 0.75 seconds

short_answer(answer)

>>> Answer: The Malfoy family, with 84 percent probability.We got the same answer as before — but with only 10 documents to look at, our reader required much less processing time. This amounts to a dramatic increase in performance when scaling the number of queries. Have a look at this tutorial to learn more about evaluating your system.

Conversely, if you’re unhappy with your system’s answers and time is not an issue, you can try increasing top_k_retriever to feed a larger variety of answer candidates to the reader.

Because top_k_retriever so heavily impacts the reader’s processing time, some may be thrown off by its name, especially seeing as there’s a top_k_reader parameter, too (the number of answers returned by the reader). But it only takes some time to get used to — we promise.

Start Optimizing Your Haystack Pipeline

In this article, you learned how to increase your system’s speed by tweaking the top_k_retriever parameter and hitting the right document length. Keep an eye out for our next post, where we’ll show you how to work with metadata to get even faster answers.

To get started with Haystack now, check out our GitHub repository. (A star is always great too! :)